
In today's fast-paced world, building software is like a relay race. If you fumble the baton pass between developers, everything slows down. That's where continuous integration (CI) comes in—it's the technique that makes those handoffs smooth, fast, and automatic. Mastering the best practices for continuous integration is what separates teams that release new features with confidence from those stuck fixing broken builds. It’s about catching bugs the moment they’re written, not days before a stressful launch. For a deep dive on this, check out our comparison of CI/CD tools.

This guide isn't about complex theory; it's a practical playbook for product and dev teams. We’ll explore battle-tested strategies like making small, frequent code commits to avoid the dreaded "merge hell," and why your testing environment must be a perfect clone of what your users see. Think of a startup that pushed a seemingly minor update, only to crash their payment system because their test setup didn't match production—a costly mistake CI best practices can prevent. Let's dive into the habits that turn a good development process into a great one.

Imagine two developers working on the same feature for two weeks without sharing their code. When they finally try to combine their work, it's a mess of conflicts that takes a whole day to untangle. This is "merge hell," and the best way to avoid it is to commit code frequently. This means developers push their small, incremental changes to the shared code repository multiple times a day. Instead of one giant, risky integration, you have dozens of tiny, safe ones.

This simple habit is one of the most powerful best practices for continuous integration. Each small commit triggers an automated build and test run, giving you feedback in minutes. If something breaks, you know exactly which tiny change caused it. It’s like proofreading a paragraph at a time instead of waiting to edit the entire book. Companies like Meta and Google built their entire engineering culture on this principle, successfully managing thousands of daily commits to a central codebase because the changes are small and easy to verify.
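To make "small" concrete, some teams add a check that measures the size of a change before it is pushed. The Python sketch below parses the output of `git diff --numstat` and flags changes above a threshold. That output has one line per file with added lines, deleted lines, and path, and binary files show `-` instead of counts. The 400-line limit and the function names are hypothetical choices for illustration, not a standard.

```python
from dataclasses import dataclass

# Hypothetical threshold: tune it to your team's idea of a "small" change.
MAX_CHANGED_LINES = 400


@dataclass
class ChangeSize:
    files: int
    added: int
    deleted: int

    @property
    def total(self) -> int:
        return self.added + self.deleted


def parse_numstat(numstat: str) -> ChangeSize:
    """Summarize `git diff --numstat` output (added<TAB>deleted<TAB>path per line)."""
    files = added = deleted = 0
    for line in numstat.splitlines():
        if not line.strip():
            continue
        add_str, del_str, _path = line.split("\t", 2)
        files += 1
        # Binary files report "-" instead of line counts: count the file, not lines.
        if add_str != "-":
            added += int(add_str)
        if del_str != "-":
            deleted += int(del_str)
    return ChangeSize(files, added, deleted)


def is_small_change(numstat: str, limit: int = MAX_CHANGED_LINES) -> bool:
    """Return True if the total number of changed lines is within the limit."""
    return parse_numstat(numstat).total <= limit


if __name__ == "__main__":
    sample = "12\t3\tsrc/login.py\n40\t0\ttests/test_login.py\n-\t-\tassets/logo.png\n"
    size = parse_numstat(sample)
    print(size.files, size.total, is_small_change(sample))  # 3 55 True
```

In a pre-push hook or CI job you might feed it the output of `git diff --numstat origin/main...HEAD`. Treat a large number as a prompt to split the work, not as a hard rule.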

Getting a team into the habit of small, daily commits requires a bit of process and the right mindset.

Have you ever heard a developer say, "Well, it works on my machine"? This classic problem often happens when team members are working with different versions of code, tools, or configuration files. The solution is to maintain a single source repository—one central place for everything needed to build, test, and run the project. This isn't just for the application code; it includes build scripts, database changes, and documentation. It's your project's single source of truth.

When everyone pulls from the same place, you eliminate inconsistencies. It's like a construction team working from a single master blueprint instead of slightly different copies. Centralizing everything also makes it easier to make large-scale changes that touch multiple parts of the system. Tech giants like Google and Microsoft take this idea furthest with a "monorepo": one repository holding the code for thousands of projects. This gives them full visibility and simpler dependency management across the whole codebase. Everyone works from the same version of every file, always.
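One reason a single repository simplifies dependency management is that CI can see every project and how they depend on each other. It can then rebuild only what a change actually touches. Below is a minimal Python sketch of that idea. The project names, directory layout, and dependency map are hypothetical. Real monorepo build tools such as Bazel derive the dependency graph from their own build files instead of a hand-written dictionary.

```python
from collections import deque

# Hypothetical monorepo layout: project name -> source directory.
PROJECT_DIRS = {
    "shared-utils": "libs/utils/",
    "payments": "services/payments/",
    "checkout-web": "apps/checkout/",
}

# Hypothetical dependency map: project -> projects it depends on.
DEPENDS_ON = {
    "shared-utils": set(),
    "payments": {"shared-utils"},
    "checkout-web": {"payments"},
}


def directly_changed(changed_paths: list[str]) -> set[str]:
    """Projects whose directory contains at least one changed file."""
    return {
        project
        for project, prefix in PROJECT_DIRS.items()
        if any(path.startswith(prefix) for path in changed_paths)
    }


def affected_projects(changed_paths: list[str]) -> set[str]:
    """Changed projects plus everything that depends on them, transitively."""
    # Invert the graph: project -> projects that depend on it.
    dependents: dict[str, set[str]] = {p: set() for p in DEPENDS_ON}
    for project, deps in DEPENDS_ON.items():
        for dep in deps:
            dependents[dep].add(project)

    # Breadth-first walk from the directly changed projects to their dependents.
    affected = directly_changed(changed_paths)
    queue = deque(affected)
    while queue:
        current = queue.popleft()
        for dependent in dependents[current]:
            if dependent not in affected:
                affected.add(dependent)
                queue.append(dependent)
    return affected


if __name__ == "__main__":
    print(sorted(affected_projects(["libs/utils/strings.py"])))  # all three projects
    print(sorted(affected_projects(["apps/checkout/cart.ts"])))  # ['checkout-web']
```

A change to the shared library triggers builds for everything downstream of it. A change to the web app rebuilds only the web app.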

A single repository needs good organization to stay manageable. Here’s how to do it right:

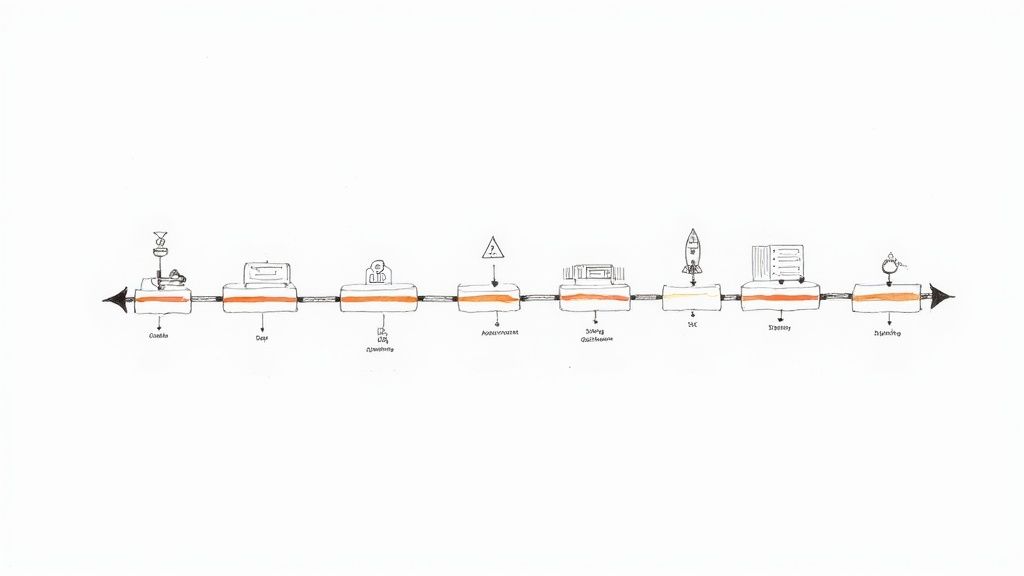

Trunk-based development, where developers merge small changes into a single main branch, or trunk, is a popular choice that pairs perfectly with CI. The goal is to keep the main branch stable and always ready to be deployed.

The moment a developer commits new code, a series of things should happen automatically: the code is compiled, dependencies are fetched, and it's packaged into a runnable application. This is the automated build, and it's a non-negotiable part of continuous integration. Without it, you're relying on manual steps that are slow, inconsistent, and prone to human error. Automation ensures that every build is done the exact same way, every single time.

A manual build process is a recipe for disaster. One developer might forget a step, or their machine might have a different software version, leading to a broken build that no one else can reproduce. An automated build, run by a CI server like Jenkins or a cloud service like GitHub Actions, is the impartial referee. It provides immediate, unbiased feedback: did this new code integrate successfully, or did it break something? Companies like Netflix depend on this automation to build and deploy hundreds of different services reliably every day. For a more detailed guide on practical implementation, our article on how Sopa uses CI/CD to build Sopa provides a real-world example.
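At its core, an automated build is a fixed list of commands that runs the same way every time and stops at the first failure. CI services like GitHub Actions or Jenkins describe these steps in their own configuration formats. The Python sketch below only illustrates the idea, and the step names and commands are placeholders rather than a real project's build.

```python
import subprocess
import sys
from dataclasses import dataclass
from typing import Optional


@dataclass
class BuildResult:
    passed: bool
    failed_step: Optional[str]
    log: list[str]


def run_build(steps: list[tuple[str, list[str]]]) -> BuildResult:
    """Run each (name, command) step in order; stop at the first non-zero exit code."""
    log: list[str] = []
    for name, command in steps:
        completed = subprocess.run(command, capture_output=True, text=True)
        log.append(f"[{name}] exit code {completed.returncode}")
        if completed.returncode != 0:
            # Fail fast: later steps (e.g. packaging) never run on a broken build.
            return BuildResult(False, name, log)
    return BuildResult(True, None, log)


if __name__ == "__main__":
    # Placeholder steps: swap in your real dependency, compile, and package commands.
    steps = [
        ("fetch-dependencies", [sys.executable, "-c", "print('deps ok')"]),
        ("compile", [sys.executable, "-c", "import sys; sys.exit(1)"]),
        ("package", [sys.executable, "-c", "print('never reached')"]),
    ]
    result = run_build(steps)
    print(result.passed, result.failed_step)  # False compile
    print("\n".join(result.log))
```

Because the steps are data rather than someone's memory, every run executes them in the same order with the same commands. That consistency is the point of the automated build.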

Setting up a solid automated build is about consistency and speed. Here are some tips:

A successful build that compiles without errors is good, but it doesn't tell you if the code actually works. That's why one of the most important best practices for continuous integration is to make every build self-testing. This means that after the code is compiled, a suite of automated tests runs automatically. If any test fails, the build is immediately marked as "broken," and the faulty code is stopped in its tracks.

Imagine a developer accidentally introduces a bug that breaks the user login flow. Without a self-testing build, this bug might not be found for days, until a QA tester stumbles upon it. With a self-testing build, an automated test for the login flow would fail minutes after the bad code was committed, alerting the developer immediately. This transforms testing from a slow, manual phase into an integrated, real-time quality check. It’s how companies like Amazon can run millions of tests a day, ensuring that even the smallest change doesn't disrupt their massive e-commerce platform.
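Here is a minimal Python sketch of the login scenario above, using the standard library's `unittest`. The `authenticate` function and its hard-coded user store are hypothetical stand-ins for real application code. The point is that the build's status comes from the test result, so a failing login test turns the build red.

```python
import io
import unittest

# Hypothetical application code under test (a stand-in for a real login flow).
_USERS = {"ada": "correct-horse"}


def authenticate(username: str, password: str) -> bool:
    return _USERS.get(username) == password


class LoginFlowTest(unittest.TestCase):
    def test_valid_credentials_log_in(self):
        self.assertTrue(authenticate("ada", "correct-horse"))

    def test_wrong_password_is_rejected(self):
        self.assertFalse(authenticate("ada", "wrong"))

    def test_unknown_user_is_rejected(self):
        self.assertFalse(authenticate("mallory", "correct-horse"))


def self_test_build() -> str:
    """Run the test suite and translate the outcome into a build status."""
    suite = unittest.TestLoader().loadTestsFromTestCase(LoginFlowTest)
    output = io.StringIO()  # keep the runner's report out of stdout
    result = unittest.TextTestRunner(stream=output, verbosity=0).run(suite)
    return "passing" if result.wasSuccessful() else "broken"


if __name__ == "__main__":
    print(self_test_build())  # passing
```

Suppose a commit broke `authenticate`, say by making it always return `True`. Then `test_wrong_password_is_rejected` would fail and `self_test_build()` would return `"broken"`. In a real pipeline, that is the point where the CI job fails and the author is notified.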

A good self-testing build is fast, reliable, and comprehensive.

If a developer has to wait 30 minutes to find out if their change broke anything, they'll stop committing frequently. They’ll batch up their work, and you’ll be right back in "merge hell." That's why keeping the build and test cycle fast—ideally under 10 minutes—is an essential CI best practice. A fast feedback loop keeps developers in the flow and encourages them to integrate their work continuously.

A quick build provides near-instant gratification. It tells a developer their change is good and they can move on to the next task. A slow build is a productivity killer. It creates a bottleneck where developers are waiting around, context-switching, and losing momentum. Major tech companies obsess over build speed for this reason. Google and Facebook invented their own custom build tools (Bazel and Buck) just to shave seconds and minutes off their build times, because at their scale, those saved minutes add up to thousands of hours of developer productivity.
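A common way to shave minutes off a build is to cache installed dependencies and reuse them until the lock file changes. Many CI services let you supply a cache key; GitHub Actions' `actions/cache` action, for example, takes a `key` input. The Python sketch below derives such a key by hashing the lock file, so the cache is invalidated exactly when dependencies change. The key format, prefix, and platform label are hypothetical.

```python
import hashlib
import tempfile
from pathlib import Path


def dependency_cache_key(lock_file: Path, prefix: str = "deps", platform: str = "linux") -> str:
    """Build a cache key that changes only when the lock file's contents change."""
    digest = hashlib.sha256(lock_file.read_bytes()).hexdigest()
    # Include the platform so caches built on different OSes are never mixed.
    return f"{prefix}-{platform}-{digest[:16]}"


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        lock = Path(tmp) / "package-lock.json"
        lock.write_text('{"lockfileVersion": 3}')
        first = dependency_cache_key(lock)
        second = dependency_cache_key(lock)  # unchanged lock file -> same key (cache hit)
        lock.write_text('{"lockfileVersion": 3, "packages": {}}')
        third = dependency_cache_key(lock)  # edited lock file -> new key (cache miss)
        print(first == second, first == third)  # True False
```

On GitHub Actions you would usually get the same effect without custom code, by passing an expression built with `hashFiles()` as the cache action's key.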

Making your builds faster is a continuous effort, not a one-time fix. Here are some proven strategies:

Use lock files (such as package-lock.json or Gemfile.lock) to ensure dependency resolution is fast and consistent.

Here's a classic startup horror story: a new feature works perfectly in testing but crashes the entire site upon release. The cause? The production server had a slightly different version of a critical system library than the test server. This is why one of the most crucial best practices for continuous integration is to test in an environment that is a perfect clone of production. You need to ensure that what you are testing is exactly what your users will be running.

Testing in a production-like environment helps you catch tricky bugs related to configuration, network setup, or data differences before they affect real users. It builds confidence that if your code passes tests here, it will work in the wild. This idea was popularized by platforms like Heroku with their "Review Apps," which automatically create a temporary, live, production-like environment for every pull request. This lets developers and product managers try the new feature in a realistic setting before it's merged.
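One lightweight safeguard against the horror story above is to compare the versions installed in your test environment with those in production. The pipeline then fails on any mismatch. The Python sketch below assumes each environment can produce a simple name-to-version manifest. How you collect it (for example, from a container image or a package manager) depends on your setup, and the package names and versions shown are made up.

```python
from typing import Optional

Drift = dict[str, tuple[Optional[str], Optional[str]]]


def find_drift(test_env: dict[str, str], prod_env: dict[str, str]) -> Drift:
    """Return packages whose version differs, or which exist in only one environment."""
    drift: Drift = {}
    for name in sorted(test_env.keys() | prod_env.keys()):
        test_version = test_env.get(name)  # None if missing from the test environment
        prod_version = prod_env.get(name)  # None if missing from production
        if test_version != prod_version:
            drift[name] = (test_version, prod_version)
    return drift


if __name__ == "__main__":
    # Hypothetical manifests captured from each environment.
    test_env = {"openssl": "3.0.13", "libpq": "16.2", "python": "3.12.3"}
    prod_env = {"openssl": "3.0.2", "libpq": "16.2", "python": "3.12.3"}
    for name, (test_v, prod_v) in find_drift(test_env, prod_env).items():
        # A real pipeline would fail the job here instead of just printing.
        print(f"{name}: test={test_v} prod={prod_v}")  # openssl: test=3.0.13 prod=3.0.2
```

Building test and production from the same container image or infrastructure-as-code definition removes most drift at the source. A check like this catches whatever slips through.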

Creating these environments used to be difficult, but modern tools have made it much easier.

If a build fails and nobody knows about it, the broken code sits in the main branch, blocking everyone else. This is why transparency is key. Making build results, test reports, and deployment statuses highly visible to the entire team is a critical CI best practice. When everyone can see the status of the pipeline at a glance, it creates a culture of shared ownership. A red (failed) build becomes everyone's problem to fix, not just the person who wrote the code.

Think of it like a factory assembly line. If a machine stops, a big red light flashes so everyone knows there’s a problem that needs immediate attention. In software, this "information radiator" could be a dashboard on a TV screen in the office or a dedicated channel in Slack. When a build breaks, the team should swarm on it to get it fixed quickly, keeping the pipeline flowing and the main branch healthy.
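Posting results to a chat channel is often just an HTTP request from the last step of the pipeline. The Python sketch below builds a status message and can send it to a Slack incoming webhook, which accepts a JSON body with a `text` field. The webhook URL, build number, branch, commit, and author are placeholders, and other chat tools expect different payloads.

```python
import json
import urllib.request


def build_status_message(build_id: str, branch: str, commit: str, passed: bool, author: str) -> dict:
    """Format a one-line build summary as a Slack-style {"text": ...} payload."""
    status = "passed" if passed else "FAILED"
    return {"text": f"Build {build_id} on `{branch}` {status} (commit {commit[:7]} by {author})"}


def post_to_webhook(webhook_url: str, payload: dict) -> int:
    """Send the payload as JSON and return the HTTP status code."""
    request = urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status


if __name__ == "__main__":
    message = build_status_message("1042", "main", "9f3c2a1b7e", passed=False, author="dana")
    print(message["text"])  # Build 1042 on `main` FAILED (commit 9f3c2a1 by dana)
    # In a real pipeline: post_to_webhook(WEBHOOK_URL, message), with the URL kept in a secret.
```

Including the commit and author in the message tells the team at a glance who should start looking. The whole team still sees the red build.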

Getting this information in front of your team can be done in a few simple ways.

The final piece of the puzzle is extending automation all the way to your users. Automating your deployment process means that every successful, tested build can be pushed to a staging or even production environment with the click of a button—or no click at all. This practice turns deployment from a scary, manual, all-hands-on-deck event into a routine, low-risk, automated process.

When deployment is automated, you can release new features and bug fixes whenever you want, not just on a rigid schedule. It's what enables companies like Amazon to deploy new code to production every few seconds. Automation removes the risk of human error: no more forgetting a step or copying the wrong file. It also improves security by ensuring that no one needs to manually log into production servers to make changes. This is a crucial element of a secure pipeline; you can learn more about CI/CD pipeline security on heysopa.com.

Automating deployments should be done carefully, with safety nets in place.
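One common safety net is to verify a release right after it goes out and roll back automatically if it looks unhealthy. The Python sketch below captures that control flow. `deploy`, `health_check`, and `rollback` are hypothetical callables you would wire to your own platform, and the retry count and delay are arbitrary defaults.

```python
import time
from typing import Callable


def deploy_with_rollback(
    version: str,
    previous_version: str,
    deploy: Callable[[str], None],
    health_check: Callable[[], bool],
    rollback: Callable[[str], None],
    attempts: int = 3,
    delay_seconds: float = 5.0,
) -> bool:
    """Deploy `version`, then poll a health check; roll back if it never passes."""
    deploy(version)
    for attempt in range(attempts):
        if health_check():
            return True
        if attempt < attempts - 1:
            time.sleep(delay_seconds)  # give the new release time to warm up
    # No healthy signal after all attempts: restore the last known-good release.
    rollback(previous_version)
    return False


if __name__ == "__main__":
    events: list[str] = []
    ok = deploy_with_rollback(
        "v2.4.0",
        "v2.3.1",
        deploy=lambda v: events.append(f"deploy {v}"),
        health_check=lambda: False,  # simulate a release that never becomes healthy
        rollback=lambda v: events.append(f"rollback to {v}"),
        delay_seconds=0,
    )
    print(ok, events)  # False ['deploy v2.4.0', 'rollback to v2.3.1']
```

Passing the platform-specific actions in as functions keeps the safety logic testable without touching real servers. Staging, canary, and production rollouts can then reuse the same logic.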

Mastering continuous integration isn't about buying a new tool; it's about changing your team's habits and processes. By adopting these eight best practices for continuous integration, you create a powerful engine for delivering high-quality software quickly and reliably. From the simple discipline of committing small changes frequently to the technical challenge of automating deployments, each practice builds on the last to create a smooth, efficient workflow.

The goal is always to create a tight, fast, and informative feedback loop. Keeping your builds fast respects developers' time and keeps them productive. Testing in a clone of production builds confidence and prevents embarrassing launch-day failures. Making results visible and automating every step fosters a culture of shared responsibility and continuous improvement.

Adopting these practices pays real dividends, transforming how your team works and delivering concrete business value. A mature CI pipeline becomes the foundation for:

Ultimately, these best practices for continuous integration help you build a culture of quality and speed. When the entire team trusts the process, they can move faster, innovate more, and build better products. The journey is ongoing, but these principles provide a solid road map to success.

Implementing these best practices will fortify your development pipeline, but manual code reviews can still create bottlenecks. By integrating Sopa into your workflow, you can automate the detection of bugs and security risks directly within your pull requests, acting as an intelligent quality gate. Ready to elevate your CI strategy and ship with unparalleled confidence? Start your free trial of Sopa today and see how our AI-powered insights can transform your code review process.